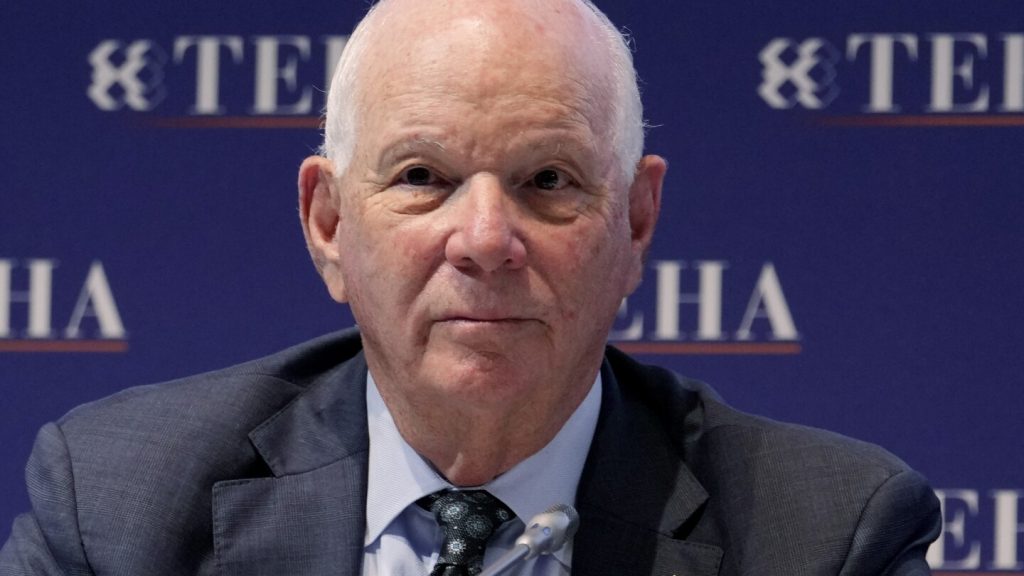

A recent incident involving an advanced deepfake operation targeting Senator Ben Cardin, the Democratic chair of the Senate Foreign Relations Committee, has raised concerns about the use of artificial intelligence to deceive political figures in the United States. The scheme involved someone posing as Dmytro Kuleba, the former Ukrainian Minister of Foreign Affairs, who tried to engage Cardin with politically charged questions during a video call. The Senator and his staff became suspicious of the caller’s identity, quickly ended the call, and sought confirmation from the Department of State that the caller was not actually Kuleba.

Cardin described the encounter as a deceptive attempt by a malign actor to pose as a known individual and engage in conversation. The Senator took swift action by alerting relevant authorities, and a comprehensive investigation is now underway. This incident highlights the growing threat posed by generative artificial intelligence, which can be used to alter videos, audio, and images with increasing sophistication. Past instances of AI-powered scams, such as a finance worker falling victim to a scammer posing as a company executive, have raised concerns about the potential for deepfake technology to be used in malicious activities.

Experts warn that recent advancements in AI technology have made deepfake schemes like the one targeting Cardin not only more believable but also easier to conduct. Cybersecurity expert Rachel Tobac noted that integrating live video and audio deepfakes has become simpler, making it harder to detect fake content. Artificial intelligence professor Siwei Lyu expressed concern that future deepfake attacks could target individuals for political or financial gain. A Senate Security memo emphasized the need for staff to verify meeting requests and remain cautious, as more attempts are expected in the coming weeks.

Former White House cybersecurity policy expert R. David Edelman described the deepfake operation as a sophisticated intelligence scheme that combined AI technology with traditional intelligence tactics. The perpetrators likely exploited the existing relationship between Cardin and the Ukrainian official to create a believable scenario for the deception. With the increasing accessibility of AI technology for malicious purposes, the risk of similar attacks targeting politicians, financial institutions, and vulnerable populations like seniors is a growing concern. As security officials and AI experts anticipate more incidents like the one involving Cardin, there is a pressing need for enhanced vigilance and proactive measures to prevent and respond to deepfake threats.