Nvidia’s remarkable success can be attributed to its rapidly growing data center business, whose revenue rose 427% year over year in the latest quarter on surging demand for artificial intelligence processors. The company is now assuring investors that customers spending billions of dollars on its chips will see a return on their AI investment. That assurance matters because clients can only spend so much on infrastructure before they need to see profits. If Nvidia’s chips deliver strong and sustainable returns, it suggests that the AI industry still has significant room to grow as it moves beyond its early development stages.

The primary buyers of Nvidia’s graphics processing units are major cloud providers such as Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle Cloud, which together account for a considerable portion of the company’s data center sales. There is also a new wave of specialized GPU data center startups that purchase Nvidia’s GPUs, install them in server racks, connect them to the internet, and rent them out to customers by the hour. For instance, CoreWeave, a GPU cloud provider, currently charges $4.25 per hour to rent an Nvidia H100, a chip that is crucial for training large language models like OpenAI’s GPT. This rental model gives many AI developers access to Nvidia hardware.

Following a strong earnings report, Nvidia’s finance chief Colette Kress told investors that cloud providers were seeing an immediate and strong return on investment. She said that for every $1 a cloud provider spends on Nvidia hardware, it can earn $5 in rental revenue over the next four years. Kress added that newer Nvidia hardware offers an enhanced return, citing the HGX H200 product, which combines eight GPUs: a provider using it to sell access to Meta’s Llama AI model could generate $7 in revenue over four years for every $1 spent on the servers. The actual return depends on factors such as how heavily the chips are utilized.
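To make the arithmetic behind these ratios concrete, here is a minimal Python sketch of how hourly rental pricing and utilization translate into revenue per dollar of hardware. It uses the $4.25 hourly H100 rate quoted earlier for CoreWeave; the function names are illustrative, and the hardware cost is a hypothetical placeholder, since the article gives no purchase price. It is not a reconstruction of how Nvidia arrived at its figures.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap days ignored for simplicity


def rental_revenue(hourly_rate: float, years: float, utilization: float = 1.0) -> float:
    """Gross rental revenue for one GPU rented by the hour.

    utilization is the fraction of hours the GPU is actually rented (0 to 1).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return hourly_rate * HOURS_PER_YEAR * years * utilization


def revenue_per_dollar(revenue: float, hardware_cost: float) -> float:
    """Revenue earned for every $1 spent on hardware."""
    if hardware_cost <= 0:
        raise ValueError("hardware_cost must be positive")
    return revenue / hardware_cost


H100_HOURLY_RATE = 4.25            # CoreWeave's quoted rate, from the article
HYPOTHETICAL_GPU_COST = 30_000.0   # assumption for illustration, not a real price

for utilization in (1.0, 0.75, 0.5):
    revenue = rental_revenue(H100_HOURLY_RATE, years=4, utilization=utilization)
    ratio = revenue_per_dollar(revenue, HYPOTHETICAL_GPU_COST)
    print(f"utilization {utilization:.0%}: ${revenue:,.0f} over four years, "
          f"${ratio:.2f} per $1 of hardware")
```

Under these assumptions, a GPU rented around the clock for four years grosses $148,920, and the figure falls in direct proportion to utilization. The sketch counts only rental revenue against hardware cost and ignores power, networking, staffing, and financing.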

Nvidia CEO Jensen Huang said that major companies such as OpenAI and Google, along with thousands of emerging generative AI startups, are lining up to acquire GPUs from cloud providers. Demand is high, with customers pressuring Nvidia to deploy systems quickly to meet their needs. Meta, for example, plans to invest billions in 350,000 Nvidia chips even though it is not a cloud provider. It is expected to monetize that investment through its advertising business or by integrating AI capabilities into its existing apps. Server clusters like the one Meta is building are considered essential infrastructure for AI production, forming what Huang refers to as AI factories.

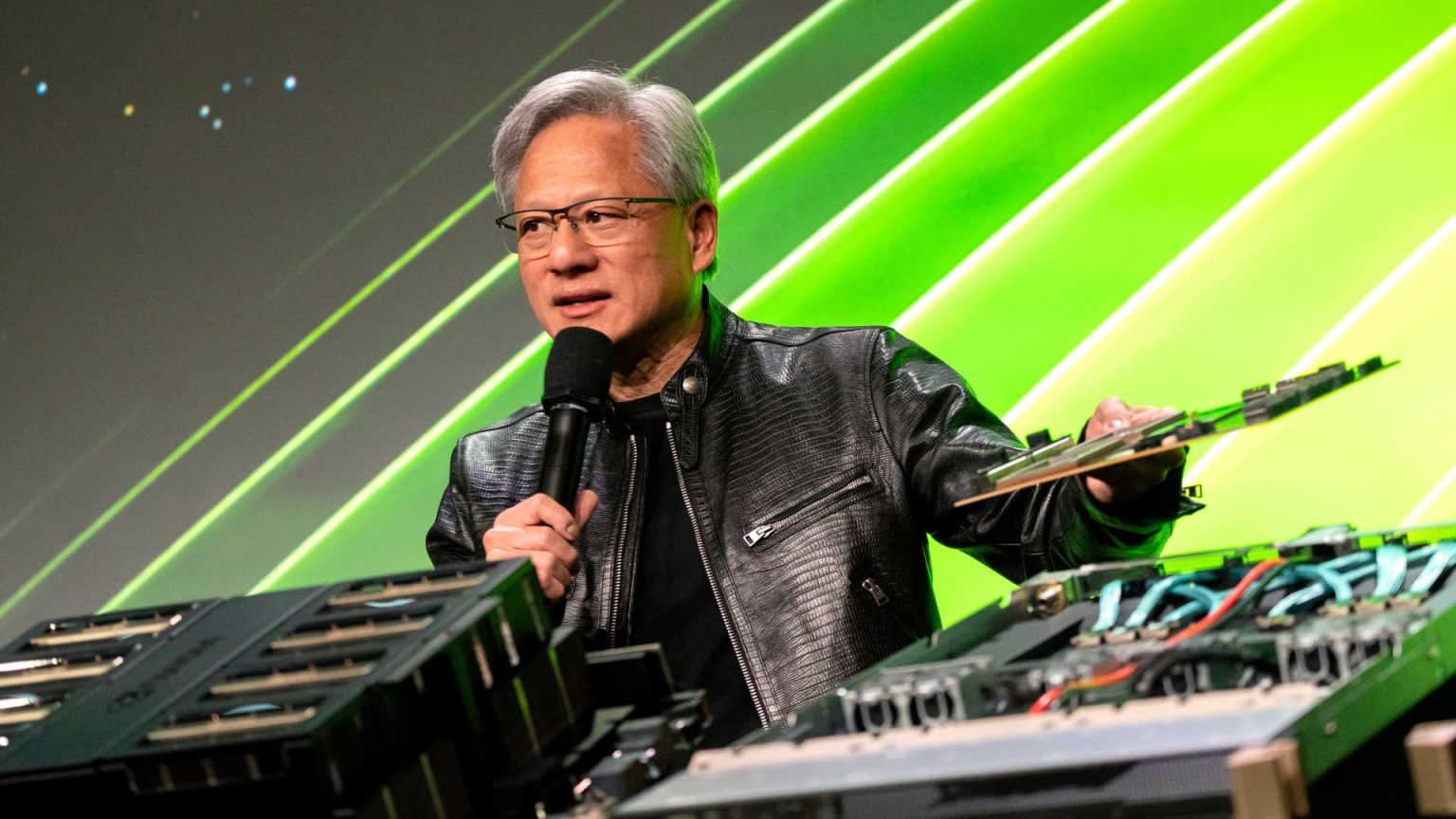

Nvidia also announced a next-generation GPU called Blackwell, which will be available in data centers in the fiscal fourth quarter, easing concerns that demand would slow as companies wait for the latest technology. The initial customers for the new chips include tech giants such as Amazon, Google, Meta, Microsoft, Oracle, and Tesla. Following the strong earnings report and the Blackwell announcement, Nvidia’s stock price surged past $1,000 for the first time. The company also revealed plans for a 10-for-1 stock split after its share price rose 25-fold over the past five years. These announcements underscore Nvidia’s strong performance and its optimism about continued growth in the AI industry.