Meta, the company that owns Instagram, announced new tools on the platform to combat sexual extortion of teens. One feature is auto-blur technology that automatically blurs photos containing nudity in direct messages. It is part of a campaign to fight sexual scams and make it harder for criminals to contact teens, with the aim of protecting people from unwanted nudity and from scammers who trick them into sending their own images. Sexual extortion, or sextortion, occurs when someone coerces another person into sending explicit photos and then threatens to make those images public unless the victim pays money or performs sexual favors.

One recent case involved two Nigerian brothers who pleaded guilty to sexually extorting teen boys across the country, which led to tragic consequences. In another case, a 28-year-old former Virginia sheriff’s deputy posed as a teen online to obtain nude photos from a 15-year-old girl, then sexually extorted her and kidnapped her at gunpoint, a case that ended in violence and destruction. The FBI has warned parents to monitor their children’s online activity because of the rising number of sextortion cases. The nudity protection feature on Instagram will be on by default for users under 18, and adult users will be encouraged to activate it. Warnings on the platform will remind users to be careful about sending explicit photos and chatting with strangers, and will give them the option to block senders and report inappropriate chats.

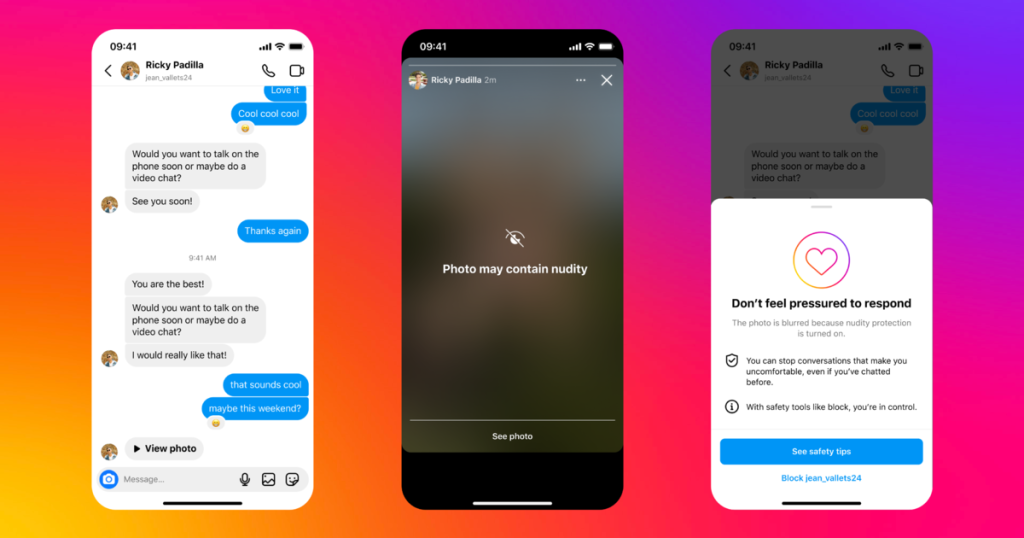

In addition to the auto-blur feature, a warning will prompt users to decide whether they want to view the image, along with options to block the sender and report the chat. Users sending direct messages with nudity will receive reminders to be cautious and will be able to unsend photos if they change their minds. To prevent scammers and sexual predators from targeting young people, Meta is tightening restrictions by hiding the message button on a teen’s profile from potential sextortion accounts. Children’s advocates praised Meta’s new measures, hoping they will encourage reporting by minors and reduce online child exploitation. The company’s efforts to protect vulnerable users and prevent sexual extortion are seen as a step in the right direction.

Overall, Meta’s updated tools on Instagram aim to enhance safety and security for users, particularly teens who are vulnerable to sexual extortion and online predators. The auto-blur technology for nudity protection in direct messages is one of the features introduced to combat sexual scams and make it tougher for criminals to contact teens. The platform’s warnings and reminders about sending explicit photos and chatting with strangers emphasize the importance of caution and give users options to block senders, report chats, and unsend photos if needed. By expanding restrictions and hiding the message button on teen profiles from potential sextortion accounts, Meta is taking proactive steps to prevent child exploitation and keep young people safe online.

The documented cases of sexual extortion highlight the serious consequences of such crimes, including tragic outcomes and violence. The FBI’s warning to parents underscores the need for vigilance in monitoring children’s online activities to protect them from predatory behavior. Children’s advocates and organizations dedicated to child safety see Meta’s new features and enhancements on Instagram as a positive development. They expect these measures to increase reporting by minors, reduce online child exploitation, and ultimately create a safer online environment for all users. As the fight against sexual scams and predators continues, Meta’s commitment to protecting vulnerable individuals and preventing harm is vital in addressing the pervasive issue of sexual extortion on social media platforms.