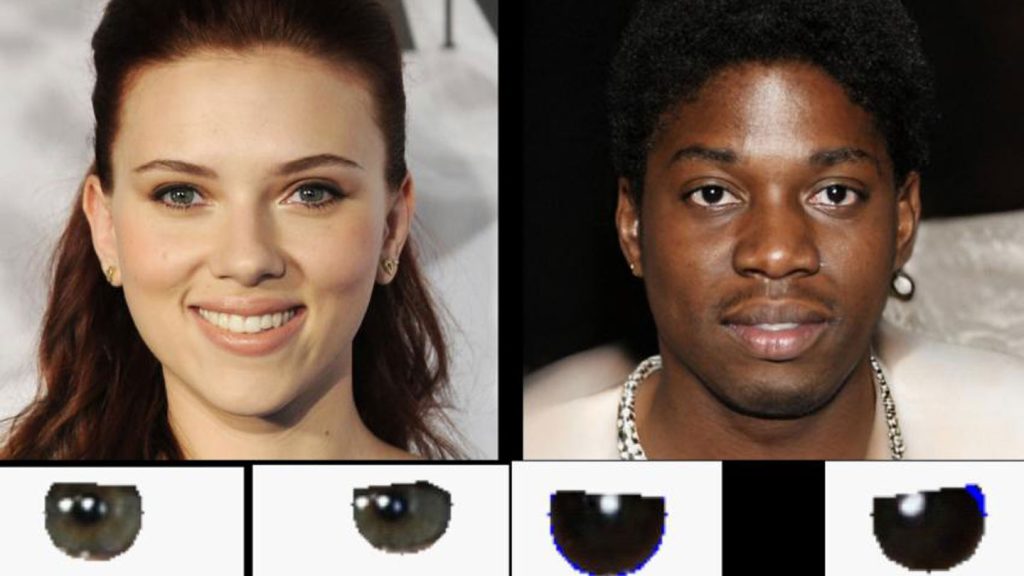

Researchers at the University of Hull in England have found that reflections in the eyes offer a potential way to detect AI-generated images of people. The team compared the light reflections in a person's two eyeballs to look for inconsistencies, borrowing an analysis technique that astronomers commonly use to study galaxies. In real images, the reflections match, showing the same number of elements such as windows or lights; in fake images, the reflections are often inconsistent because the physics is incorrect. The research was presented at the Royal Astronomical Society’s National Astronomy Meeting in Hull by observational astronomer Kevin Pimbblet and graduate student Adejumoke Owolabi.

The team used a computer program to analyze the reflections in the eyes and calculated a Gini index from the pixel values, which represent the intensity of light in each pixel. The Gini index, originally developed to measure wealth inequality in a society, is used by astronomers to understand how light is distributed in galaxy images. By comparing the Gini indices of the reflections in the left and right eyeballs, the researchers could assess whether an image was likely to be authentic. They found that for around 70% of the fake images analyzed, the difference in Gini indices was significantly larger than for real images, which showed little to no difference. A large difference between the two eyes can therefore indicate that an image may be a deepfake.
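The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' program: it assumes the reflection pixels for each eye have already been extracted as flat lists of intensities, it uses the standard discrete Gini formula (the exact normalization the team used is not stated here), and the function names `gini` and `gini_difference` are hypothetical.

```python
def gini(values):
    """Gini index of non-negative values: 0 means evenly spread,
    values near 1 mean the total is concentrated in a few entries."""
    xs = sorted(float(v) for v in values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0  # assumption: treat an empty or all-dark region as perfectly even
    # Standard rank-weighted formula over ascending values (ranks start at 1).
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


def gini_difference(left_pixels, right_pixels):
    """Absolute difference between the Gini indices of the two eye reflections."""
    return abs(gini(left_pixels) - gini(right_pixels))


# Made-up intensities for illustration only (not data from the study).
left_eye = [10, 12, 240, 11, 9, 235]
right_eye = [11, 10, 238, 12, 10, 236]
print(gini_difference(left_eye, right_eye))
```

A small difference would be consistent with matching reflections, while a large one would flag the image for closer inspection. Any cutoff between "small" and "large" would have to be calibrated on real data; none is given in the text above.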

While the technique can help detect deepfakes, it is not foolproof and should be used as part of a broader approach to identifying AI-generated images. For instance, a real image may look like a fake if the person blinks, or if they are positioned so close to a light source that only one eye shows a reflection. The researchers suggest that a human should review images flagged by the technique to confirm whether they are fake. The method could also work on videos, but Pimbblet notes that it is not a definitive solution for detecting fakery. Rather, it is a helpful tool that could be part of a series of tests, at least until AI technology improves at replicating reflections accurately.

In conclusion, the University of Hull team has developed a novel method for detecting AI-generated images by analyzing the reflections in the subjects' eyes. A mismatch between the Gini indices of the two reflections signals that an image is more likely to be a deepfake. The technique could be a valuable tool in the fight against misinformation and fraudulent content, although it has limitations and should be combined with other detection methods. As AI technology advances, such approaches may become increasingly important for verifying the authenticity of visual content.